Optimising iRacing update sizes by 20% with this one simple trick!

I’m always on the lookout for new ways to procrastinate, and what better way to do that than by delving into the inner workings of my favorite time-suck, iRacing? So, I took a break from virtual racing to figure out how updates are packaged and installed. Little did I know, this quest would lead me down a rabbit hole, but hey, at least I’ll be a pro at updating my iRacing game.

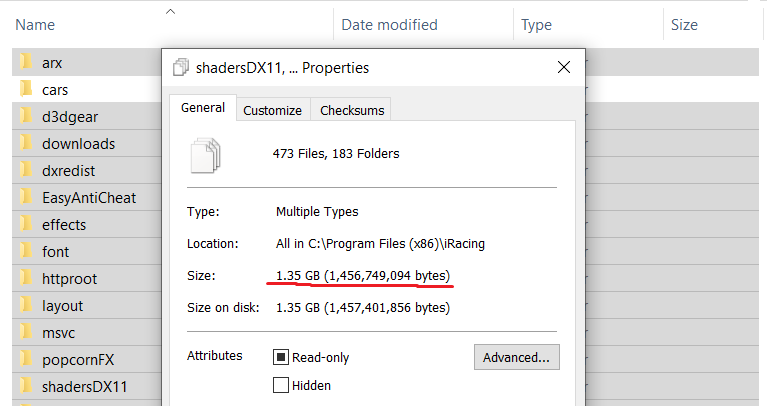

How big is iRacing?

The update packages for iRacing are big. For perspective, my iRacing directory, minus the tracks and cars (which are installed individually and separately), is 1.35GB:

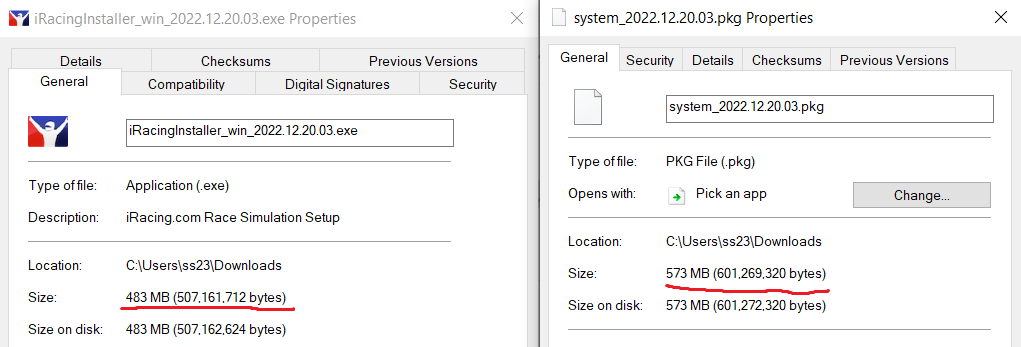

And here is the size of an update package, compared with a fresh install package:

With only 483MB to install iRacing from scratch, a system update package size of 573MB seems really excessive.

What is an update made of?

Through the rigorous application of ~~guessing~~ hard work and perseverance, I was able to write some code that can unpack an iRacing update and view the underlying uncompressed files. In the case of a system update package like this, it’s just a copy of the iRacing directory with all the files it contains (along with some metadata to delete files that are no longer required):

```
$ python ./extract-package.py system_2022.12.20.03.pkg extract/
Detected 201 files
Signature: be113d051f9bc9c1cc3130e0b5392412ef097f58e592576162074b0c1516a0823ec0a62d530d76a06f82e9c860db54414a82e26d76717c4c6ad2beef4698b162fa54f10871008597247a1a51648c01b2a2902dffeb524145c56b0730c9be92f7beb2f622021b5a3614d92564dea31111c55f7d1e81f54fc3c81f9dc124bf67e4
```

This update package unpacks to 1083MB of files.

How are updates compressed?

You may be surprised to learn that the compression algorithm used to package updates is DEFLATE, invented in 1993. While the compression ratios you can get from it aren’t horrible, its compression and decompression speeds definitely are. A quick look at some representative benchmarks shows DEFLATE (as implemented by zlib) to be roughly 5x slower to compress and 4x slower to decompress than a modern algorithm like zstd.
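If you want to try this kind of comparison on your own data, a rough timing harness is enough. The sketch below is not the benchmark cited above: it times a compress/decompress round trip using Python's built-in `zlib` for DEFLATE and, only if the third-party `zstandard` package happens to be installed, zstd. The `benchmark` and `available_codecs` helpers and the sample data are illustrative assumptions.

```python
import time
import zlib

try:
    import zstandard  # third-party package (pip install zstandard); optional here
except ImportError:
    zstandard = None


def benchmark(compress, decompress, data, repeats=3):
    """Return (compressed_size, best_compress_seconds, best_decompress_seconds)."""
    best_c = best_d = float("inf")
    compressed = b""
    for _ in range(repeats):
        start = time.perf_counter()
        compressed = compress(data)
        best_c = min(best_c, time.perf_counter() - start)

        start = time.perf_counter()
        restored = decompress(compressed)
        best_d = min(best_d, time.perf_counter() - start)

        # Sanity check: a codec that doesn't round-trip isn't worth timing.
        assert restored == data
    return len(compressed), best_c, best_d


def available_codecs():
    """Map codec name -> (compress, decompress) callables."""
    codecs = {"deflate (zlib, level 6)": (lambda d: zlib.compress(d, 6), zlib.decompress)}
    if zstandard is not None:
        cctx = zstandard.ZstdCompressor(level=3)  # zstd's default level
        dctx = zstandard.ZstdDecompressor()
        codecs["zstd (level 3)"] = (cctx.compress, dctx.decompress)
    return codecs


if __name__ == "__main__":
    # Stand-in data; point this at a real file for meaningful numbers.
    sample = b"iRacing update payload " * 200_000 + bytes(1 << 20)
    for name, (comp, decomp) in available_codecs().items():
        size, ct, dt = benchmark(comp, decomp, sample)
        print(f"{name:24} {size:>10} bytes  compress {ct:.3f}s  decompress {dt:.3f}s")
```

Timings vary a lot with the data and the machine, so treat the output as a rough guide rather than a reproduction of published benchmarks.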

So, what kind of improvements could be seen with a switch?

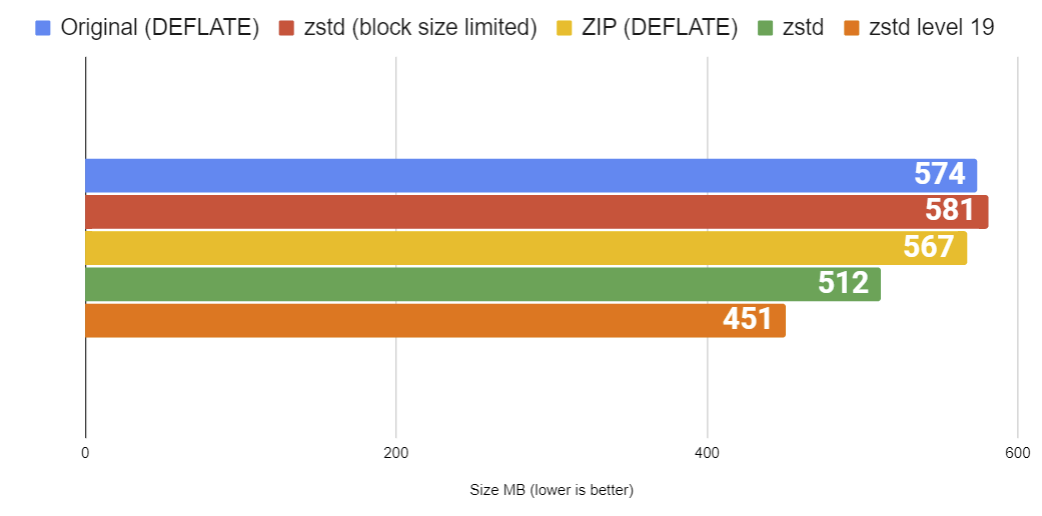

Benchmarking it

Since I’ve already written code to generate and unpack update packages, we can simply measure the results directly. All tests use the files from system_2022.12.20.03.pkg.

| Algorithm | Size |
|---|---|
| Original (DEFLATE) | 574 MB |
| zstd | 581 MB |

Huh?! Migrating to a newer algorithm didn’t help at all; the package actually got slightly bigger! To understand why, we need to dig a little deeper into the package format.

Block size limits and other problems

When decompressing packages from iRacing, I noticed that no compressed block ever held more than 0x20000 bytes (128 KiB) of uncompressed data. This wasn’t something I was familiar with, but I’m told it’s a relatively common way of adding two features to a compression scheme:

- The ability to seek to an arbitrary location in a compressed file while decompressing at most one extra block (0x20000 bytes) of data. Because every block except possibly the last holds exactly 0x20000 bytes of uncompressed data, we can skip forward block by block, using the compressed sizes stored in each block’s metadata, without decompressing anything along the way.

- Easily parallelized decompression. Since each block is compressed independently, each one can also be decompressed independently, keeping every CPU thread busy.
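To make those two features concrete, here is a minimal sketch of fixed-size block compression using Python's `zlib`. It illustrates the general technique only and is not iRacing's actual package format; `BLOCK_SIZE` mirrors the 0x20000 limit, and `compress_blocks`, `read_at` and `decompress_parallel` are hypothetical names.

```python
import zlib
from concurrent.futures import ThreadPoolExecutor

BLOCK_SIZE = 0x20000  # 128 KiB of uncompressed data per block


def compress_blocks(data, block_size=BLOCK_SIZE):
    """Compress each fixed-size slice independently and return the list of blocks."""
    return [zlib.compress(data[i:i + block_size]) for i in range(0, len(data), block_size)]


def read_at(blocks, offset, length, block_size=BLOCK_SIZE):
    """Seek: decompress only the blocks covering [offset, offset + length)."""
    first = offset // block_size
    last = (offset + length - 1) // block_size
    chunk = b"".join(zlib.decompress(b) for b in blocks[first:last + 1])
    start = offset - first * block_size
    return chunk[start:start + length]


def decompress_parallel(blocks, workers=4):
    """Blocks share no state, so they can be decompressed concurrently (in order)."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return b"".join(pool.map(zlib.decompress, blocks))
```

Because the uncompressed size of every block is fixed, `read_at` can work out which block holds a given offset without touching the others. Threads should help in `decompress_parallel` since CPython's `zlib` releases the GIL while it inflates data.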

Strangely, I don’t think either of these features is actually useful for iRacing. The first is never going to be used, as updates are always unpacked in full and never need to seek to a specific place in a file. The second isn’t needed because each file is already compressed independently, so decompression can be parallelized across files, waiting at most for the largest file to finish. And even if decompression speed were a priority, DEFLATE used this way is already massively slower than something like zstd. To demonstrate the inefficiency of this method of splitting files, here’s an example from a file that contains large blocks of repeating data:

```
iRacingSim64DX11.exe
Uncompressed size: 176062648 0xa7e80b8
Datablock size : 10068119 0x99a097
[...]
Compressed size : 151 0x97   <- What is going on here?
Uncompressed size: 131072 0x20000
Compressed size : 151 0x97
Uncompressed size: 131072 0x20000
Compressed size : 151 0x97
Uncompressed size: 131072 0x20000
```

Because this file contains a large number of null bytes (0x00), and the packager splits blocks every 0x20000 bytes, we hit a degenerate case where massive amounts of easily compressible data are stored hundreds of times less efficiently than they could be. On top of that, each block carries at least 24 bytes of metadata, which means over 10% of each of these compressed blocks is pure overhead!
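The figures in that dump are easy to sanity-check. This short calculation uses only the numbers above plus the 24-byte minimum metadata, and assumes, for illustration, that every block (including the final partial one) carries that metadata:

```python
import math

BLOCK_SIZE = 0x20000         # 131072 bytes of uncompressed data per block
METADATA_PER_BLOCK = 24      # minimum metadata bytes per block

exe_size = 176_062_648       # iRacingSim64DX11.exe, uncompressed
zero_block_compressed = 151  # compressed size of a 0x20000-byte block of zeros

# Number of blocks the packager produces for this one file.
blocks = math.ceil(exe_size / BLOCK_SIZE)
# Lower bound on metadata spent on this file alone.
metadata_bytes = blocks * METADATA_PER_BLOCK
# Fraction of each stored all-zero block (data + metadata) that is metadata.
share = METADATA_PER_BLOCK / (zero_block_compressed + METADATA_PER_BLOCK)

print(f"{blocks} blocks, >= {metadata_bytes} bytes of block metadata")
print(f"metadata is {share:.1%} of each stored zero block")
```

That comes to 1344 blocks and at least 32,256 bytes of metadata for this one file, and for the all-zero blocks roughly one stored byte in seven is overhead.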

For comparison, if we simply zipped up the extracted files, we’d likely see better results than the iRacing implementation. The ZIP format uses DEFLATE too, but it doesn’t split files into blocks this way.
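Zipping a directory takes nothing more than Python's standard `zipfile` module. Here is a minimal sketch, assuming the package has already been extracted to a directory like `extract/`; `zip_directory` is a hypothetical helper and not necessarily the tool behind the ZIP figure in this post.

```python
import os
import zipfile


def zip_directory(src_dir, zip_path, level=9):
    """Zip every file under src_dir with DEFLATE and return the archive size in bytes."""
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED,
                         compresslevel=level) as zf:
        for root, _dirs, files in os.walk(src_dir):
            for name in files:
                path = os.path.join(root, name)
                # Store paths relative to src_dir so the archive mirrors the tree.
                zf.write(path, arcname=os.path.relpath(path, src_dir))
    return os.path.getsize(zip_path)
```

Calling `zip_directory("extract", "extract.zip")` returns the archive size in bytes. Each file becomes a single DEFLATE stream, so files are still compressed independently, just without a 0x20000 split inside them.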

| Algorithm | Size |
|---|---|
| ZIP (DEFLATE) | 567 MB |

Coming in at 567MB, it’s the smallest we’ve seen yet! Now, what if we apply the same idea of not constraining the block size to zstd? This is simple to do, even with the existing code: we just raise the maximum block size from 0x20000 to something much larger than any file stored in the archive. Voilà, we have a package with an unlimited block size.
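As a minimal sketch of how small that change is, here is a hypothetical `pack` function whose `max_block_size` parameter controls the split, with `None` meaning one block per file. It uses Python's built-in `zlib` as a stand-in codec and assumes 24 bytes of metadata per block; it is not the real packer or its zstd backend.

```python
import zlib

BLOCK_OVERHEAD = 24  # assumed per-block metadata, matching the minimum observed above


def pack(data, compress=zlib.compress, max_block_size=0x20000):
    """Return the packed size of `data`; max_block_size=None means a single block."""
    step = max_block_size or len(data) or 1
    total = 0
    for start in range(0, len(data), step):
        # Every block pays its own metadata plus its own compressed stream.
        total += BLOCK_OVERHEAD + len(compress(data[start:start + step]))
    return total


if __name__ == "__main__":
    sample = bytes(16 * 0x20000)  # 2 MiB of zeros, like the runs in iRacingSim64DX11.exe
    print("limited:  ", pack(sample))
    print("unlimited:", pack(sample, max_block_size=None))
```

On 2 MiB of zeros, the limited version pays the per-block metadata and stream setup costs sixteen times instead of once. One plausible reason the limit hurts zstd far more than DEFLATE is window size: DEFLATE can only refer back 32 KiB, so a 128 KiB block costs it little, whereas zstd can match against data many megabytes earlier, but only within the same block.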

| Algorithm | Size |
|---|---|
| Original (DEFLATE) | 574 MB |
| zstd (block size limited) | 581 MB |
| ZIP (DEFLATE) | 567 MB |
| zstd | 512 MB |

Finally, we’re making some progress! That’s about 10% better than the original, and we haven’t modified the package format at all.

Those are rookie numbers

For some final improvements, zstd has some knobs you can twiddle to squeeze out the best possible compression ratio, at the price of much slower compression (zstd’s decompression speed stays roughly the same across levels). I’ve cranked those dials as far as practical, and the results are better yet again!
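The exact settings aren't listed here, so treat this purely as an illustration: it uses the third-party `zstandard` Python package (an assumption about tooling, not necessarily what my packer or iRacing would use) to compress at level 19, optionally with long-distance matching and a larger window. The import is guarded so the module still loads without the package, and `compress_max` is a hypothetical helper.

```python
try:
    import zstandard  # third-party: pip install zstandard
except ImportError:
    zstandard = None


def compress_max(data, level=19, long_distance=False, window_log=27):
    """Compress with a high zstd level; optionally enable long-distance matching."""
    if zstandard is None:
        raise RuntimeError("the 'zstandard' package is not installed")
    if long_distance:
        # Start from the level's preset, then widen the window and turn on LDM.
        params = zstandard.ZstdCompressionParameters.from_level(
            level, window_log=window_log, enable_ldm=True)
        cctx = zstandard.ZstdCompressor(compression_params=params)
    else:
        cctx = zstandard.ZstdCompressor(level=level)
    return cctx.compress(data)


def decompress(blob):
    # One-shot decompress relies on the content size that compress() writes
    # into the frame header. A window_log above 27 would also need a larger
    # max_window_size on the decompressor.
    return zstandard.ZstdDecompressor().decompress(blob)
```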

| Algorithm | Size |
|---|---|
| Original (DEFLATE) | 574 MB |
| zstd (block size limited) | 581 MB |
| ZIP (DEFLATE) | 567 MB |
| zstd | 512 MB |
| zstd level 19 | 451 MB |
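The headline percentages follow directly from the table. Here is a quick check of each result relative to the original 574 MB package, using only the table's own figures:

```python
ORIGINAL_MB = 574  # Original (DEFLATE) package size from the table

RESULTS_MB = {
    "zstd (block size limited)": 581,
    "ZIP (DEFLATE)": 567,
    "zstd": 512,
    "zstd level 19": 451,
}


def savings(results, original):
    """Fractional size reduction of each result relative to the original package."""
    return {name: 1 - size / original for name, size in results.items()}


for name, saving in savings(RESULTS_MB, ORIGINAL_MB).items():
    # A negative saving means the package got bigger than the original.
    print(f"{name:26} {RESULTS_MB[name]:4} MB  saving {saving:+.1%}")
```

That works out to about 10.8% smaller for unlimited-block zstd at default settings and about 21.4% smaller at level 19, while the block-size-limited zstd package is about 1.2% larger than the original.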

Final results

Well, it looks like we uncovered the secret to smaller and faster iRacing updates: dropping the 0x20000 block size limit, ditching that outdated compression algorithm from 1993, and embracing the future with zstd. Not only did we see a whopping 20% reduction in package size (574MB down to 451MB), but even sticking with the default zstd options should give a substantial speedup in decompression.